November 14, 2018

Virtual production: Digital Domain talks digital humans and more

Imagine a film set where completely photorealistic digital human avatars mirror the precise actions and expressions of the live actors playing their roles—all in a virtual space and in real time, at up to 90 fps. For directors, producers, and actors alike, this technology will be a huge game-changer, and it’s already being prototyped today.

In the latest episode of our podcast series, Digital Humans: Actors and Avatars, fxguide’s Mike Seymour (who has himself been digitally cloned in the name of research) interviews Digital Domain’s Scott Meadows, Head of Visualization, and Doug Roble, Senior Director of Software R&D, about the studio’s trailblazing work in the area of virtual production and digital humans.

To kick things off, Meadows explains how the original toolset he assembled for previs on Tron is constantly evolving, as the capabilities of both graphics hardware and real-time engines advance to states that were unthinkable a few years ago. Having honed his tools and techniques on Beauty and the Beast, Black Panther, and Ready Player One, Meadows is now approaching a point where—instead of creating rough 3D models for previs and then throwing them away—assets can move through the pipeline from previs, to postvis, and right through to full VFX post-production.

Diving deep on digital humans

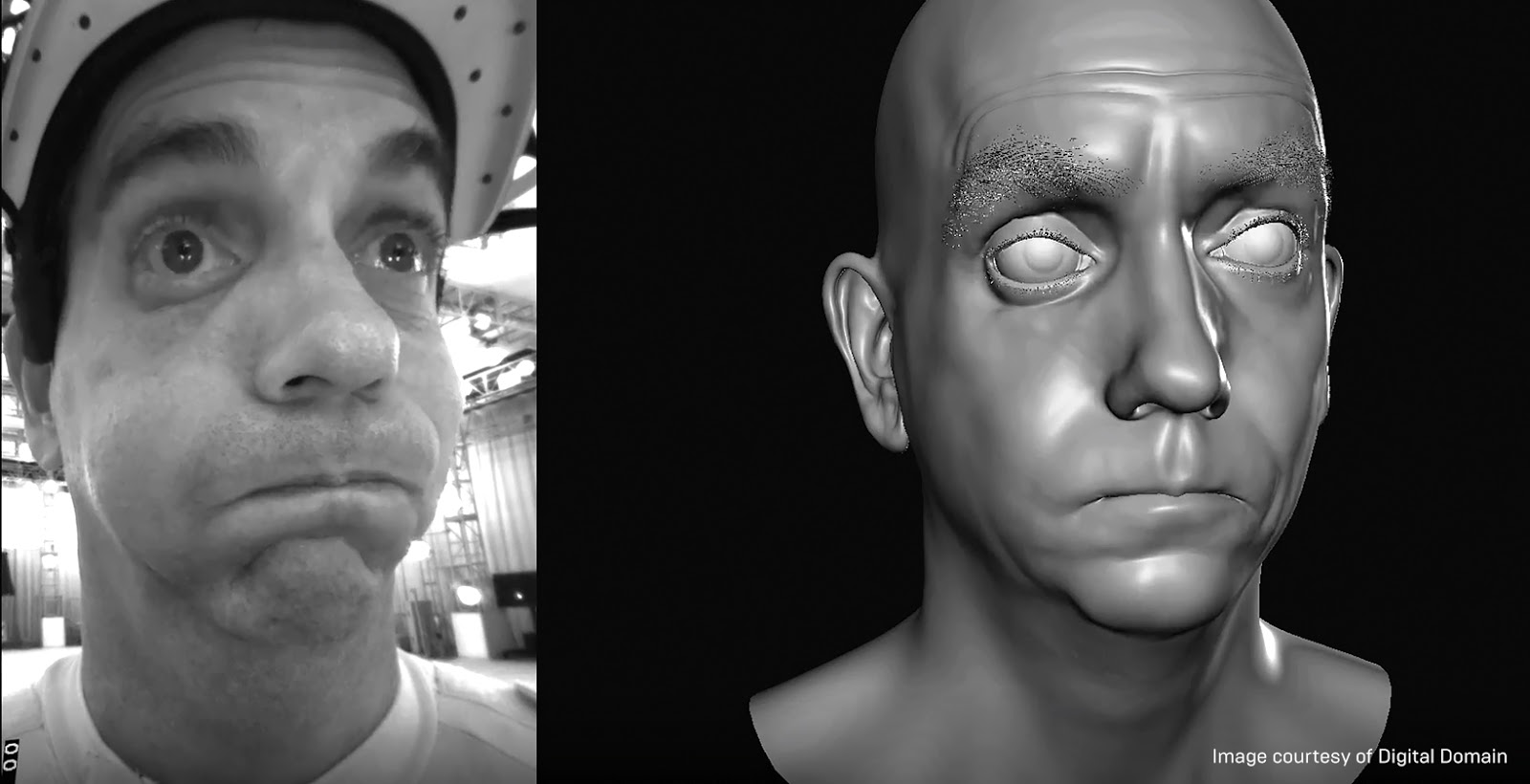

The director’s ability to see CG elements on set while shooting the live-action components is fundamentally liberating. But what if they could see the actual, final-quality CG characters reacting in real time to actors’ input, so they could judge the performance of the character, and not the actor? Or even take over the acting themselves to give direction?

That’s what Roble set out to explore with his ‘Digital Doug’ project. He undertook to create a real-time, highest-resolution, markerless, minimally rigged, single-camera facial animation system (no small challenge!), using himself as the guinea pig. To achieve this, he had to combine several existing techniques with some proprietary special sauce, before turning to machine learning to complete the picture.

First, ultra-high-resolution scans were taken in several poses at the USC Institute for Creative Technologies’ Light Stage. These scans deliver incredible photorealism as still images, but have no temporal coherence—there’s no way to move from one pose to another without the textures swimming.

Next, Roble turned to Dimensional Imaging (DI4D) to get scanned data of himself that was temporally coherent, albeit at a lower resolution.

The third step saw Roble taking to Digital Domain’s traditional motion capture stage with his face adorned with 160 markers, to capture full-motion animation for a range of emotions and facial positions. But with only 160 vertices, the meshes this produced did not deliver sufficient resolution for photorealism.

Not to be deterred, Roble was then able to use machine learning within Digital Domain’s own Masquerade software—first used on Avengers: Infinity War—to take information from the Light Stage and DI4D scans and use it to up-res the motion capture data to 80,000 vertices. These high-resolution meshes could then be used to create blendshape rigs that animators could work with in a traditional manner.
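For readers unfamiliar with blendshape rigs, here is a minimal sketch of how one is commonly evaluated: each shape stores per-vertex offsets from a neutral mesh, and a pose is the neutral mesh plus a weighted sum of those offsets. This is the generic textbook formulation, not Masquerade or Digital Domain's rig; the function name, shape names, and the two-vertex toy mesh are all assumptions made for illustration.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def evaluate_blendshapes(
    neutral: List[Vec3],
    deltas: Dict[str, List[Vec3]],
    weights: Dict[str, float],
) -> List[Vec3]:
    """Return neutral + sum(weight * delta) for every vertex.

    `deltas` maps a (hypothetical) shape name to per-vertex offsets
    from the neutral mesh; `weights` are the animator's slider values.
    """
    posed = [list(v) for v in neutral]
    for name, weight in weights.items():
        for i, (dx, dy, dz) in enumerate(deltas[name]):
            posed[i][0] += weight * dx
            posed[i][1] += weight * dy
            posed[i][2] += weight * dz
    return [(x, y, z) for x, y, z in posed]


# Toy two-vertex "face"; a production mesh would have tens of thousands of vertices.
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
deltas = {
    "smile": [(0.0, 1.0, 0.0), (0.0, 0.5, 0.0)],
    "jaw_open": [(0.0, -2.0, 0.0), (0.0, 0.0, 0.0)],
}
posed = evaluate_blendshapes(neutral, deltas, {"smile": 0.5, "jaw_open": 0.25})
print(posed)
```

Animators work with the weights rather than the vertices, which is why a high-resolution mesh can still be posed "in a traditional manner" once the shapes exist.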

But that’s not all the system could do. Roble was able to use it to create 20,000 pairs of very high-resolution images and meshes for a wide range of poses. The final step was to feed this data to machine learning algorithms, and use it to train the system. At the end of this training period, the system was able to compute the right combination of high-resolution textures and mesh for any live-action input at speeds as high as 90 fps. So whatever Doug does, digital Doug (or another CG character based on Doug) can now do too—in real time. And, not to be outdone, Unreal Engine is able to keep up with the rendering side of things.
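To put the 90 fps figure in perspective, the time available per frame follows from simple arithmetic. The short sketch below computes it; the function name is illustrative, and it says nothing about how the actual system divides that time between inference and rendering.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to produce one frame at the given rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


budget = frame_budget_ms(90)
print(f"{budget:.1f} ms per frame at 90 fps")
```

At 90 fps, capturing the input, computing the mesh and textures, and rendering the result all have to fit into roughly 11 milliseconds per frame.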

Join the conversation

Are you a visual disruptor? Curious to delve deeper into virtual production? Join the conversation now. Subscribe here, and we’ll send you an email when we post something new in this exciting series. Also, stop in at our new virtual production hub to catch up on more insightful articles, videos, and other content.