Real-time explainers

What is real-time ray tracing, and why should you care?

Courtesy of Epic Records

For the last three or four years, you may have been hearing a lot about real-time ray tracing. You may have been told by your friends that you really need to get hold of a coveted NVIDIA RTX graphics card for your next PC or laptop. But you may not be entirely clear what all the fuss is about. If that’s the case, this article is for you.

What is ray tracing in the first place?

So let’s start with the basics. We’ll define ray tracing at a very high level, and look at how it differs from rasterization, the technology it is in many cases superseding.

Both rasterization and ray tracing are rendering methods used in computer graphics to determine the color of the pixels that make up the image displayed on your screen, or created on your hard drive when you press the Render button.

Rasterization works by projecting the objects in a scene onto the 2D image plane via transformation matrices, then determining which surface is closest to the camera at each pixel (typically with a depth buffer). It determines the color of each pixel based on information (color, texture, normal) stored on the mesh (model), combined with the lighting in the scene. It is generally much faster than ray tracing, but it can only approximate effects that rely on bounced light, such as reflections, translucency, and ambient occlusion.
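
To make the "mapping 3D objects to the 2D plane via transformation matrices" step concrete, here is a minimal sketch of a perspective projection. It assumes an OpenGL-style projection matrix and a camera at the origin looking down the negative z-axis; the helper names `perspective_matrix` and `project_to_pixel` are hypothetical, and a real engine would also apply model and view matrices, clipping, and a depth test.

```python
import math

def perspective_matrix(fov_y_deg, aspect, near, far):
    """OpenGL-style 4x4 perspective projection matrix (camera looks down -z)."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    return [
        [f / aspect, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ]

def project_to_pixel(point, matrix, width, height):
    """Map a camera-space 3D point to pixel coordinates plus a depth value in [-1, 1]."""
    homogeneous = (point[0], point[1], point[2], 1.0)
    clip = [sum(m * v for m, v in zip(row, homogeneous)) for row in matrix]
    ndc_x, ndc_y, ndc_z = (c / clip[3] for c in clip[:3])  # perspective divide
    px = (ndc_x + 1.0) * 0.5 * width
    py = (1.0 - ndc_y) * 0.5 * height  # flip y so row 0 is the top of the image
    return px, py, ndc_z

proj = perspective_matrix(90.0, 1.0, 0.1, 100.0)
print(project_to_pixel((1.0, 0.0, -5.0), proj, 100, 100))   # x is about 60, right of center
print(project_to_pixel((1.0, 0.0, -10.0), proj, 100, 100))  # x is about 55: farther away, closer to center
```

The returned depth is the value a depth buffer would compare at each pixel to decide which surface is visible. This sketch skips clipping, so points behind the camera are not handled.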

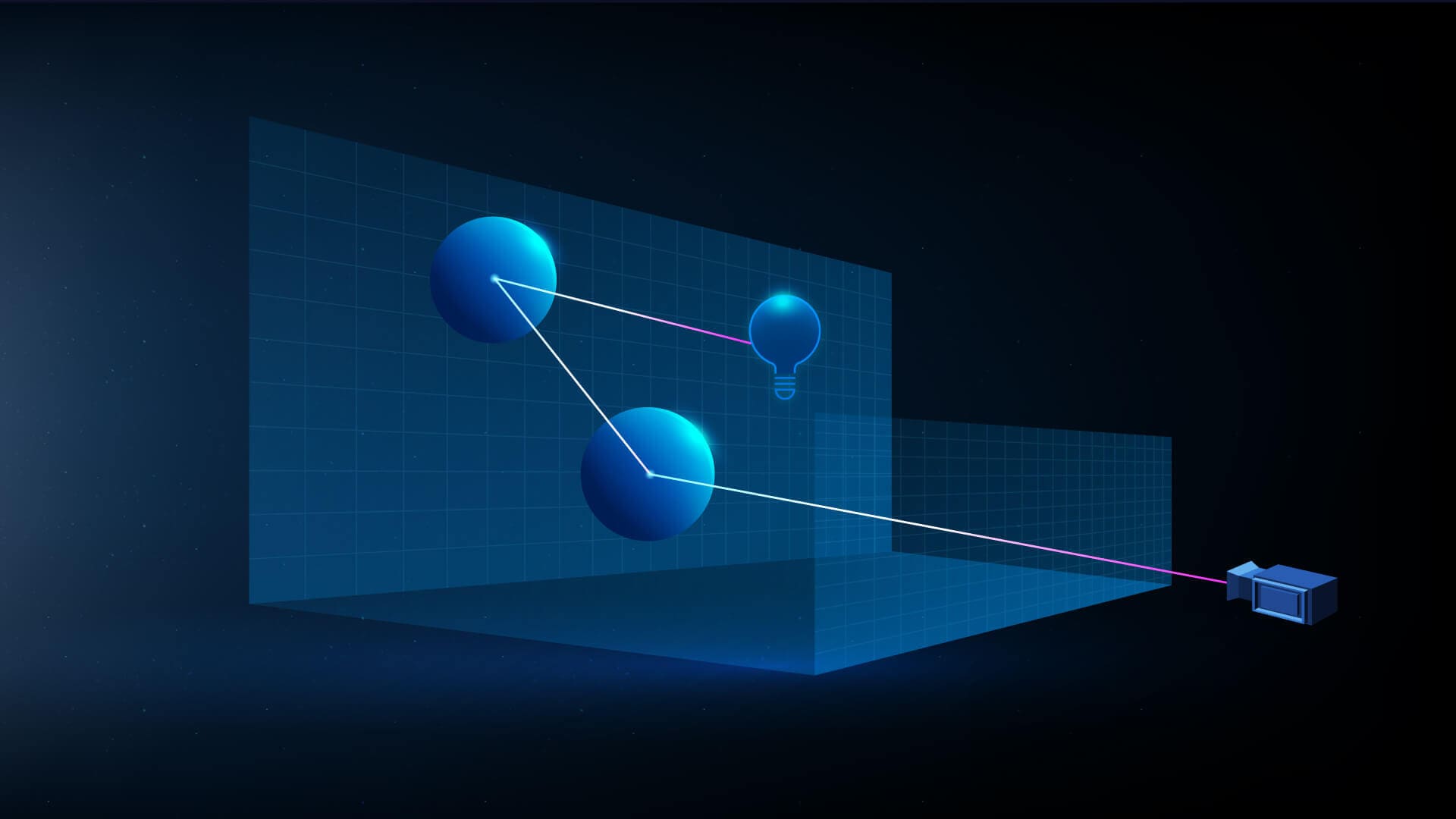

Ray tracing, on the other hand, works by casting a ray out from the point of view of the camera and tracing its path as it bounces around objects in the scene until it reaches a light source, collecting and depositing color as it goes. Because it mimics the physical behavior of light rays, it delivers much higher-quality, more photorealistic results than rasterization, like soft, detailed shadows, ambient occlusion, and accurate refractions and reflections. All these benefits conventionally come at a cost, however: speed.
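
The first step of that process, casting a ray from the camera and finding the nearest surface it hits, can be sketched with a ray-sphere intersection test. This is a minimal illustration that assumes a scene made only of spheres; the `Sphere` type and the `intersect` and `trace` functions are hypothetical, and production renderers work with triangle meshes and acceleration structures such as bounding volume hierarchies (BVHs).

```python
import math
from dataclasses import dataclass

Vec = tuple[float, float, float]

def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

@dataclass
class Sphere:
    center: Vec
    radius: float
    color: Vec

def intersect(origin: Vec, direction: Vec, sphere: Sphere):
    """Nearest positive distance t where a ray with unit direction hits the sphere, or None."""
    oc = sub(origin, sphere.center)
    b = dot(oc, direction)
    c = dot(oc, oc) - sphere.radius ** 2
    disc = b * b - c  # quarter discriminant of t^2 + 2bt + c = 0
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):  # try the nearer root first
        if t > 1e-6:
            return t
    return None

def trace(origin: Vec, direction: Vec, spheres, background: Vec = (0.0, 0.0, 0.0)) -> Vec:
    """Color of the closest sphere along the ray, or the background if nothing is hit."""
    closest_t, hit_color = math.inf, background
    for s in spheres:
        t = intersect(origin, direction, s)
        if t is not None and t < closest_t:
            closest_t, hit_color = t, s.color
    return hit_color

red = Sphere(center=(0.0, 0.0, -5.0), radius=1.0, color=(1.0, 0.0, 0.0))
blue = Sphere(center=(0.0, 0.0, -10.0), radius=1.0, color=(0.0, 0.0, 1.0))
print(trace((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), [blue, red]))  # (1.0, 0.0, 0.0): red is nearer
```

A full ray tracer would continue from the hit point, spawning shadow, reflection, and bounce rays and accumulating their contributions into the pixel color.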

What is real-time rendering?

When you watch a film at the theater—whether it’s a live-action production with real actors and environments or a computer-generated feature—what your brain perceives as continuous motion is actually 24 separate frames being played in succession for every second of footage.

For computer-generated films, each of those frames must be rendered; that is, the computer must calculate what each pixel that makes up the frame should look like. In traditional computer graphics (CG) pipelines, this is done with an offline renderer, and it is not uncommon for a single frame to take hours, or even days, to render at the desired resolution and quality.

However, for games or other interactive applications, where there is no way of knowing in advance what the camera will see at each moment, frames must be rendered as fast as they are played back; that is, in real time. Games now target 60 or even 120 frames per second, which leaves roughly 16.7 or 8.3 milliseconds, respectively, to render each frame.
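
The per-frame budget is simply 1,000 milliseconds divided by the frame rate. A quick worked calculation follows; the hypothetical `frame_budget_ms` helper is just for illustration, and the one-hour offline frame is an illustrative assumption rather than a measured figure.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to render one frame at the given frame rate."""
    return 1000.0 / fps

for fps in (24, 60, 120):  # film playback rate, then common game targets
    print(f"{fps:>3} fps -> {frame_budget_ms(fps):.1f} ms per frame")
# prints:
#  24 fps -> 41.7 ms per frame
#  60 fps -> 16.7 ms per frame
# 120 fps -> 8.3 ms per frame

# Illustrative comparison: an offline frame that takes one hour to render
one_hour_ms = 60 * 60 * 1000
print(round(one_hour_ms / frame_budget_ms(60)))  # prints 216000
```

In other words, an offline renderer that spends an hour per frame is working with a budget over 200,000 times larger than a game running at 60 fps.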

Rasterization is fast enough that it has been used for real-time rendering in games for decades, albeit with many clever tricks and compromises. But until recently, ray tracing was used only for offline rendering. Its only use in games was for cinematics (also known as cutscenes), which, like films, are rendered in advance and then simply played back within the game; you can’t interact with them.

What made real-time ray tracing possible?

So what changed? In 2018, two concurrent technology catalysts emerged: Microsoft’s DXR (DirectX Raytracing) framework, and NVIDIA’s RTX platform. Working with NVIDIA and ILMxLAB, Epic Games was able to show real-time ray tracing for the first time at GDC in March of 2018 with the Reflections tech demo, which featured characters from Star Wars: The Last Jedi.

The piece demonstrated textured area lights, ray-traced area light shadows, ray-traced reflections, ray-traced ambient occlusion, cinematic depth of field, and NVIDIA real-time denoisers—all running in real time.

These were early days. The prototype Unreal Engine code was not yet available to users; the hardware required to run the demo in real time consisted of an NVIDIA DGX Station with four NVIDIA Volta GPUs, with a price tag somewhere in the region of $70,000—so neither the software nor the hardware was exactly what you could call accessible to the average user.

Is real-time ray tracing for everyone?

Fast forward to August 2018 at Gamescom, where NVIDIA showed Reflections running in real time on just one of its newly announced $1,200 GeForce RTX 2080 Ti GPUs.

At GDC in March the following year, Epic Games made its real-time ray tracing technology available as a Beta feature in Unreal Engine 4.22. It used a hybrid approach, applying ray tracing to the rendering passes that benefited from it and traditional raster techniques to those that didn’t, which enabled it to achieve real-time performance.

As part of the announcement, Goodbye Kansas and Deep Forest Films demonstrated how far the software had come in 12 months with Troll, a real-time cinematic featuring a digital human.

Today, with Unreal Engine 5, Epic’s real-time ray tracing software has received many upgrades to its performance, stability, and feature completeness, and RTX graphics cards capable of running it in real time can be had for under $500. Meanwhile, Unreal Engine is free to download and start using; combined with the lower price of RTX graphics cards, this puts real-time ray tracing within reach of a much broader range of users.

What are the benefits of ray tracing?

Let’s take a look at some of the actual benefits of ray tracing, whether real-time or offline.

Accurate reflections add a lot to a scene’s credibility. Ray-traced reflections can support multiple bounces, which means they can create interreflections between reflective surfaces, just as in real life. Unlike screen-space reflections, they can also reflect objects outside the camera’s field of view. For automotive designers and marketers, the way light reflects off a surface is of paramount importance; ray tracing enables them to visualize it before the car is built.
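
A reflected ray’s direction follows the mirror law r = d − 2(d·n)n, where d is the incoming direction and n is the unit surface normal. The sketch below applies it in a hypothetical toy scene of two parallel mirrors at x = 0 and x = 1 to show how each hit spawns another reflected ray, which is where multiple-bounce interreflections come from. The function names are illustrative, and real renderers cap the bounce count for performance, as `max_bounces` does here.

```python
import math

def reflect(d, n):
    """Mirror direction d about unit surface normal n: r = d - 2 (d . n) n."""
    k = 2.0 * (d[0] * n[0] + d[1] * n[1] + d[2] * n[2])
    return (d[0] - k * n[0], d[1] - k * n[1], d[2] - k * n[2])

def bounce_between_mirrors(origin, direction, max_bounces):
    """Follow a ray between two parallel mirrors at x = 0 and x = 1 (a toy scene).

    Each hit spawns a reflected ray, which is how multiple-bounce reflections
    build up interreflections. Returns the list of hit points.
    """
    hits = []
    o, d = origin, direction
    for _ in range(max_bounces):
        if d[0] > 0.0:
            plane_x, normal = 1.0, (-1.0, 0.0, 0.0)  # right mirror faces left
        elif d[0] < 0.0:
            plane_x, normal = 0.0, (1.0, 0.0, 0.0)   # left mirror faces right
        else:
            break  # ray runs parallel to both mirrors and never hits them
        t = (plane_x - o[0]) / d[0]
        o = (o[0] + t * d[0], o[1] + t * d[1], o[2] + t * d[2])
        hits.append(o)
        d = reflect(d, normal)
    return hits

s = 1.0 / math.sqrt(2.0)
hits = bounce_between_mirrors((0.5, 0.0, 0.0), (s, s, 0.0), max_bounces=3)
print([round(p[1], 3) for p in hits])  # prints [0.5, 1.5, 2.5]; hits alternate between x = 1 and x = 0
```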

Courtesy of GIANTSTEP

Ray-traced shadows can simulate soft area lighting effects for objects in the environment. This means that, depending on the light’s source size or source angle, an object’s shadow will be sharper near the contact surface and will soften and widen farther away from it. As well as grounding objects in the scene, ray-traced shadows capture fine details in areas like upholstery, making interiors much more convincing. For architects, ray-traced shadows also enable accurate sun studies.
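
Ray-traced soft shadows are commonly computed by casting several shadow rays from a surface point toward different points on an area light and measuring the fraction that reach it unobstructed. The sketch below assumes a hypothetical toy scene (one spherical occluder and one square area light parallel to the ground) and uses a fixed grid of light samples so the result is deterministic. The function names are illustrative; real renderers use randomized sampling plus denoising.

```python
import math

def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def segment_blocked(p, q, center, radius, eps=1e-6):
    """True if the shadow ray from p to q passes through the sphere (center, radius)."""
    delta = _sub(q, p)
    length = math.sqrt(_dot(delta, delta))
    d = tuple(x / length for x in delta)
    oc = _sub(p, center)
    b = _dot(oc, d)
    disc = b * b - (_dot(oc, oc) - radius * radius)
    if disc < 0.0:
        return False  # the ray's line misses the sphere entirely
    root = math.sqrt(disc)
    t0, t1 = -b - root, -b + root
    # blocked if the sphere's [t0, t1] span overlaps the segment between p and q
    return t0 < length - eps and t1 > eps

def light_visibility(point, light_center, light_size, occluder, n=8):
    """Fraction of an n x n grid of samples on a square area light (parallel to
    the ground) that `point` can see: 0 = umbra, 1 = fully lit, else penumbra."""
    center, radius = occluder
    visible = 0
    for i in range(n):
        for j in range(n):
            dx = ((i + 0.5) / n - 0.5) * light_size  # cell-center offsets on the light
            dz = ((j + 0.5) / n - 0.5) * light_size
            sample = (light_center[0] + dx, light_center[1], light_center[2] + dz)
            if not segment_blocked(point, sample, center, radius):
                visible += 1
    return visible / (n * n)

ball = ((0.0, 1.0, 0.0), 0.5)  # sphere hovering above the ground plane y = 0
light = (0.0, 4.0, 0.0)
for x in (0.0, 0.6, 5.0):
    print(x, light_visibility((x, 0.0, 0.0), light, 2.0, ball))
```

Shrinking `light_size` toward zero turns the partially lit point at x = 0.6 fully dark, which is the hard-edged shadow a point light would cast; a larger light widens the penumbra.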

Global illumination simulates how light’s natural interaction with objects affects the color of other objects in the scene, taking into account the absorption and reflectiveness of the materials the objects are made of. It greatly increases the realism of scenes. With ray tracing, global illumination is computed for each frame, rather than being pre-baked, meaning that lighting can change over time, such as a light being turned on or off, or the sun rising, or someone opening a door. Unreal Engine’s Lumen is a fully dynamic global illumination and reflections system that uses multiple ray tracing methods.
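
To show numerically what "light bouncing off one object changes the color of another" means, here is a classic radiosity-style sketch. This is a different, older technique than the ray tracing methods Lumen uses. Each patch’s outgoing light B_i is its own emission E_i plus its reflectance ρ_i times the light it gathers from every other patch j, weighted by a form factor F_ij. The three patches, their colors, and the form factors below are made-up illustrative values, and `solve_radiosity` is a hypothetical helper.

```python
def solve_radiosity(emission, reflectance, form_factors, iterations=100):
    """Iterate B_i = E_i + rho_i * sum_j F_ij * B_j, independently per RGB channel.

    emission[i], reflectance[i]: RGB triples for patch i.
    form_factors[i][j]: fraction of light leaving patch i that reaches patch j.
    """
    n = len(emission)
    radiosity = [list(e) for e in emission]  # start from direct emission only
    for _ in range(iterations):
        radiosity = [
            [
                emission[i][ch]
                + reflectance[i][ch] * sum(form_factors[i][j] * radiosity[j][ch] for j in range(n))
                for ch in range(3)
            ]
            for i in range(n)
        ]
    return radiosity

# Made-up toy scene: 0 = ceiling light, 1 = red wall, 2 = white floor
emission = [(1.0, 1.0, 1.0), (0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
reflectance = [(0.0, 0.0, 0.0), (0.8, 0.1, 0.1), (0.7, 0.7, 0.7)]
form_factors = [[0.0, 0.3, 0.5],
                [0.3, 0.0, 0.4],
                [0.5, 0.4, 0.0]]
floor = solve_radiosity(emission, reflectance, form_factors)[2]
print([round(c, 3) for c in floor])  # prints [0.458, 0.362, 0.362]: the white floor picks up red
```

Although the floor itself is white, its red channel ends up highest because light bounced off the red wall. Setting the light’s emission to zero and solving again drops everything to black, a miniature version of lighting that responds when a light is switched off.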

Ambient occlusion is the darkening of areas where nearby geometry blocks ambient lighting (lighting that does not come directly from a light source), such as the corners and edges where walls meet or the crevices and wrinkles in skin. Ray-traced ambient occlusion is much more accurate (and therefore more believable) than screen-space ambient occlusion, because it is not limited to the geometry visible on screen.
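
Ray-traced ambient occlusion can be estimated by casting short rays over the hemisphere above a surface point and measuring how many escape without hitting nearby geometry. The sketch assumes a hypothetical toy scene (an upward-facing floor meeting a wall that fills the half-space x ≤ 0) and a deterministic grid of cosine-weighted directions. The `max_distance` cutoff, which limits occlusion to nearby geometry, and the function names are illustrative choices.

```python
import math

def cosine_hemisphere_dirs(n):
    """Deterministic cosine-weighted unit directions around the +y normal (n x n grid)."""
    dirs = []
    for i in range(n):
        for j in range(n):
            u = (i + 0.5) / n
            phi = 2.0 * math.pi * (j + 0.5) / n
            r = math.sqrt(u)
            dirs.append((r * math.cos(phi), math.sqrt(1.0 - u), r * math.sin(phi)))
    return dirs

def ambient_occlusion(point, wall_x=0.0, max_distance=1.0, n=16):
    """Fraction of hemisphere rays from an upward-facing floor point that travel
    max_distance without hitting the wall filling x <= wall_x.
    1.0 = fully open; lower values = more occluded (darker)."""
    dirs = cosine_hemisphere_dirs(n)
    open_rays = 0
    for d in dirs:
        hit = False
        if d[0] < 0.0:  # only rays heading toward the wall can hit it
            t = (wall_x - point[0]) / d[0]
            hit = 0.0 < t < max_distance
        if not hit:
            open_rays += 1
    return open_rays / len(dirs)

print(ambient_occlusion((5.0, 0.0, 0.0)))   # 1.0: nothing nearby
print(ambient_occlusion((0.05, 0.0, 0.0)))  # about 0.51: the wall blocks roughly half the hemisphere
```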

For exterior renderings, or interiors with large windows, accurately depicting light from the sky is essential to creating a believable scene. With ray tracing, you can use a high dynamic range (HDR) image to light your scene, producing subtle, soft effects that effectively mimic the real world.
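
Lighting with an HDR image means integrating the image’s radiance over the hemisphere above each surface point. A minimal Monte Carlo sketch follows, assuming the HDR image is stored as an equirectangular (latitude-longitude) grid of RGB floats and that the surface faces straight up. With cosine-weighted directions (probability density cos θ / π), the irradiance estimate reduces to π times the average sampled radiance. The tiny two-row "HDR image" and the function names are hypothetical; values above 1.0 stand for the brighter-than-white radiance HDR images can store.

```python
import math

def cosine_hemisphere_dirs(n):
    """Deterministic cosine-weighted unit directions around the +y normal (n x n grid)."""
    dirs = []
    for i in range(n):
        for j in range(n):
            u = (i + 0.5) / n
            phi = 2.0 * math.pi * (j + 0.5) / n
            r = math.sqrt(u)
            dirs.append((r * math.cos(phi), math.sqrt(1.0 - u), r * math.sin(phi)))
    return dirs

def lookup_equirect(image, d):
    """Nearest-pixel lookup of an equirectangular HDR image (rows of RGB tuples,
    row 0 = straight up) for unit direction d, with +y as up."""
    height, width = len(image), len(image[0])
    phi = math.atan2(d[2], d[0])                  # longitude in [-pi, pi]
    theta = math.acos(max(-1.0, min(1.0, d[1])))  # angle away from straight up
    col = min(int((phi + math.pi) / (2.0 * math.pi) * width), width - 1)
    row = min(int(theta / math.pi * height), height - 1)
    return image[row][col]

def irradiance_up(image, n=16):
    """Monte Carlo irradiance on an upward-facing surface lit by the HDR image."""
    dirs = cosine_hemisphere_dirs(n)
    total = [0.0, 0.0, 0.0]
    for d in dirs:
        rgb = lookup_equirect(image, d)
        for ch in range(3):
            total[ch] += rgb[ch]
    return tuple(math.pi * t / len(dirs) for t in total)

# Toy 2 x 4 "HDR" environment: bright sky above, a very bright ground below
sky = [[(3.0, 3.0, 3.0)] * 4, [(100.0, 100.0, 100.0)] * 4]
print(irradiance_up(sky))  # about (9.42, 9.42, 9.42): only the sky half reaches an upward-facing surface
```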

Courtesy of ARCHVYZ, design by Toledano Architects

In addition to all of these benefits, ray tracing is generally much easier to set up and use than the methods used to approximate some of these effects in raster renderers, such as depth-map shadows, baked global illumination, and reflection probes or planes.

Who is using real-time ray tracing?

The goal of many game developers is to make their experiences more and more believable, so for them real-time ray tracing is a natural fit. But because AAA games typically have a three- to four-year production cycle, players have had to wait patiently to reap the benefits of the new technology. Today, however, the latest batch of gorgeous-looking games that use real-time ray tracing is rapidly growing, and includes such titles as Deliver Us Mars, Ghostwire: Tokyo, and Hellblade: Senua’s Sacrifice. There are many more titles in the works.

Beyond games, real-time ray tracing in Unreal Engine is used for architectural visualization, such as in this interactive sales tool from Buildmedia; automotive visualization, like this VR design review tool from Subaru; virtual production for film and television, including this example of how Volkswagen is making greener car commercials; and other real-time applications. Any interactive application that strives for realism benefits from real-time ray tracing, from virtual concerts to online fashion fitting and beyond. Because they simulate the natural behavior of light, real-time ray-traced experiences are far more immersive and believable.

Courtesy of Epic Records

Real-time ray tracing is also shaking up the world of animated content creation for film and TV, as evidenced by Studio EON’s ‘Armored Saurus’ series, which also employed virtual production techniques. When you can render in a fraction of the time it would take in a traditional pipeline and still have the look your audience demands, you not only save on production costs, but you can iterate right up to the last minute to find the best version of your story.

Courtesy of Armored Saurus | Studio EON

Getting started with real-time ray tracing

If you’re keen to try out real-time ray tracing in Unreal Engine for yourself, you can download the software for free, and visit the documentation to check out the system requirements. You can also find out how to turn on real-time ray tracing, and learn more about the features.

If you prefer video tutorials, head on over to the Epic Developer Community, where you’ll find hundreds of hours of free online learning, including these courses on ray tracing.

In addition, you can learn about another ray tracing technique available in Unreal Engine called path tracing: a progressive, hardware-accelerated rendering mode that avoids the compromises of real-time features, offering physically correct global illumination, reflection and refraction of materials, and more.
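
"Progressive" means the image is refined over successive frames: each frame contributes new noisy samples per pixel, and the displayed value is their running average, so noise falls as samples accumulate. Below is a minimal sketch of that accumulation, with a hypothetical `ProgressiveAccumulator` class and a random number standing in for a path-traced sample. It illustrates the general idea and is not Unreal Engine’s implementation.

```python
import random

class ProgressiveAccumulator:
    """Running per-pixel average of noisy samples, refined one frame at a time."""

    def __init__(self, num_pixels):
        self.mean = [0.0] * num_pixels
        self.count = 0

    def add_frame(self, samples):
        """Fold one new sample per pixel into the running average."""
        self.count += 1
        for i, sample in enumerate(samples):
            # incremental mean: m_n = m_(n-1) + (x_n - m_(n-1)) / n
            self.mean[i] += (sample - self.mean[i]) / self.count

    def reset(self):
        """Discard accumulated samples, e.g. when the camera or scene changes."""
        self.mean = [0.0] * len(self.mean)
        self.count = 0

rng = random.Random(42)
acc = ProgressiveAccumulator(num_pixels=1)
for frame in range(1, 10001):
    # stand-in for one noisy path-traced sample whose true average is 0.5
    acc.add_frame([rng.random()])
    if frame in (1, 100, 10000):
        print(frame, round(acc.mean[0], 3))
```

The incremental-mean update avoids storing every sample. Any change to the camera or scene invalidates the average, which is why a progressive renderer typically restarts accumulation and only converges while the view holds still.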

Courtesy of ARCHVYZ, design by Toledano Architects